By Dr. Ahmed Hassoon and Rachel Reed.

Artificial Intelligence (AI) may hold the promise of redefining the landscape of healthcare, with the potential to improve patient outcomes, streamline operational processes, and strengthen research capabilities. However, the journey to harness the full potential of AI in healthcare is fraught with both internal and external implementation challenges. As with any major shift in the sociotechnical systems of healthcare, difficulties are to be expected, and the rollout of AI is no exception: it will be met with healthy skepticism, resistance to change, a limited understanding of AI technology, and apprehension regarding data privacy, security, and ethics. These issues are further exacerbated because we still struggle simply to speak with each other and to understand many of the features and flaws of this quickly evolving technology. If not addressed, these problems will limit the utilization and positive impact of AI technologies in healthcare settings.

How can we bridge these challenges?

Guiding Universal Communication Principles

One of the most significant barriers to the effective implementation of AI in healthcare is the absence of universal communication principles. Without a standardized communication strategy, inaccurate information and disinformation will spread. Given the recent surge in access to AI technologies, a lack of concrete communication standards can distort individual and societal perceptions and dampen enthusiasm for these transformative technologies; the downstream effects are already visible in the substantial public reaction to AI-related news.

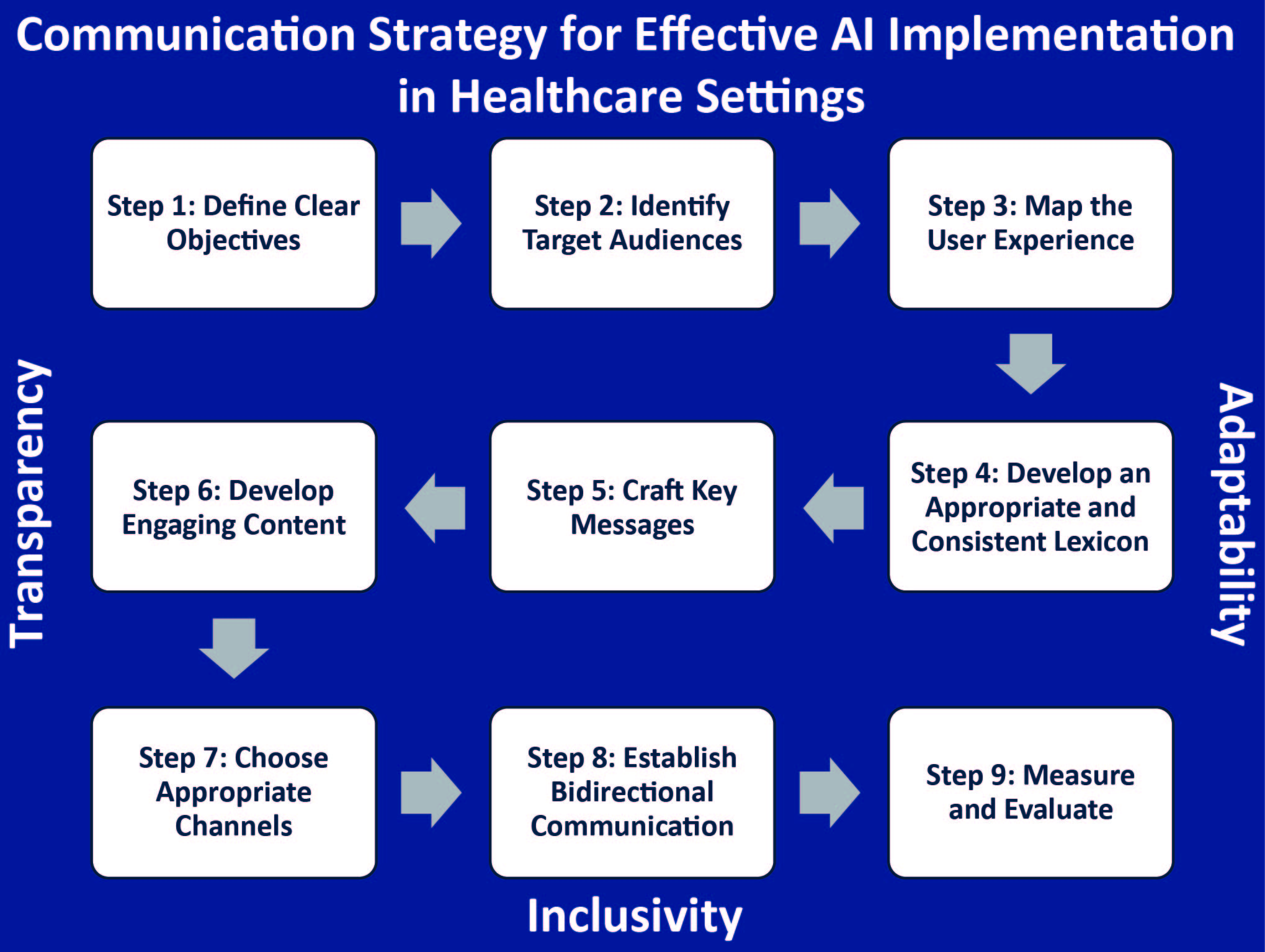

As shown in the figure above, we propose a framework for guiding communication strategies for the successful implementation of AI in healthcare. It rests on three foundational principles: 1) transparency, 2) inclusivity, and 3) adaptability. Transparency requires forthright communication about the capabilities, limitations, and potential ramifications of AI technologies. Inclusivity emphasizes engaging a comprehensive spectrum of stakeholders in the AI journey, including healthcare providers, patients, administrators, and policymakers. Adaptability entails keeping abreast of the latest advancements in AI and responding to the evolving needs and expectations of stakeholders.

How Many Steps to AI Integration?

The application of these three foundational principles to the integration of AI in the domains of patient safety and quality of care will require several key steps:

1) It is imperative to articulate the specific goals and objectives of implementing AI technology, such as improving patient outcomes, enhancing operational efficiency, or advancing research capabilities.

2) It is crucial to identify the key stakeholders and groups that will be directly affected by AI implementation and to map their experiences.

3) It is necessary to develop an appropriate and consistent lexicon and to tailor messaging and engaging content to address specific stakeholder concerns and needs.

4) It is important to establish bidirectional channels through which stakeholders can give feedback on the AI technology and its implementation, and to respond to that feedback in a timely manner.

5) It is crucial to systematically measure and evaluate the communication strategy to ensure its effectiveness and to identify areas for improvement.

At its core, this comprehensive strategy aims to facilitate the smooth integration of AI into healthcare settings and to enhance our ability to identify and address challenges. Collectively, we expect that adopting this holistic approach within the healthcare sector will improve the quality of patient care by harnessing the full transformative capacity of AI as a member of the healthcare team, maximizing the benefit to patients, practitioners, and health systems as a whole.

About the authors:

Ahmed Hassoon, MD, MPH, PMP is an Assistant Research Professor at Johns Hopkins Bloomberg School of Public Health. His research focuses on the application of data science and artificial intelligence in diagnostic safety.

Rachel Reed is an MPH student at the Johns Hopkins Bloomberg School of Public Health. She is a communications strategist with experience across various health sector disciplines.

The opinions expressed here are those of the authors and do not necessarily reflect those of The Johns Hopkins University.

It is always interesting to see how people find different approaches to solving various tasks. Sometimes you encounter similar situations in completely unrelated areas, and the method of overcoming obstacles largely determines the end result.

By the way, it reminded me of the importance of being prepared for unforeseen difficulties, especially in winter on the road. In such cases, snow chains become a real salvation, allowing you to confidently move forward even on the most difficult sections.

과도한 소비는 자원 소모와 환경 파괴를 초래할 수 있으며, 상품권 카드결제 소비주의적인 가치관은 소수의 소비에만 초점을 맞추어 경제적 불평등을 증가시킬 수 있을 것이다. 따라서, 쇼핑을 할 때는 지속 할 수 있는 한 소비를 실천하고, 본인의 필요에 따라 적당하게 결정하는 것이 중요합니다.

[url=https://zeropin.co.kr/]상품권 카드결제[/url]

смотреть здесь https://bonus-betting.ru/greenada-casino-promokod/

k-biz pro guide However, around the system of that time he bets roughly three game titles on a daily basis on all big sports. His full games guess could be 3285 more than that time span.

[url=https://www.kodyglobal.com/]hanteo family[/url]

과학기술아이디어통신부는 1일 올해 연구개발(R&D) 예산 규모와 활용 단어를 담은 ‘2024년도 연구개발산업 종합실시계획’을 공지하며 양자테크닉을 16대 중점 투자방향 중 처음으로 거론했다. 양자기술 분야 R&D 예산은 2025년 323억 원에서 올해 695억 원으로 증액됐다.

[url=https://exitos.co.kr/]3D 시제품 제작[/url]

https://skladovka.ua/

Optimize your cognitive function with trusted solutions. Find [URL=https://suddenimpactli.com/levitra/]levitra 10mg[/URL] for boosted focus, available on the web.

Discover

Xperience enhanced cardiovascular health by managing your symptoms efficiently. Opt to purchase your medication through https://98rockswqrs.com/item/hardon-oral-jelly-flavoured/ , a reliable option for patients seeking optimal stroke prevention.

Relieve your chronic heart and pain conditions easily by choosing to order [URL=https://yourbirthexperience.com/item/vidalista/]acheter en ligne vidalista[/URL] , the go-to option for managing your health better.

кликните сюда [url=https://kkrak36at.at]kra37 at[/url]

Смотреть здесь [url=https://krak-37.at]kraken37[/url]

подробнее здесь [url=https://kra37cc.cc/]kra37 сс[/url]

https://gepatunb.com/the-most-visually-stunning-games-at-spinanga-4/

ссылка на сайт [url=https://kra37cc.cc]кракен клир[/url]

зайти на сайт [url=https://kra37cc.cc]кракен купить[/url]

Manage your HIV effectively with [URL=https://marcagloballlc.com/drugs/walmart-tadalafil-price/]tadalafil 10mg[/URL] , a vital component in care regimens. This budget-friendly option ensures accessibility to high-quality medication for everyone in need.

Discover the benefits of low price nizagara for heart health and stroke prevention. Obtain the essential pharmaceutical through the internet today and reap its protective effects.

Browse

Very often, individuals seek cost-effective solutions for their medical needs. Discovering a reliable platform to acquire essential medications can significantly reduce expenses and ensure consistent quality. For those looking to secure their pharmaceuticals conveniently, opting to [URL=https://nabba-us.com/product/generic-retin-a-in-canada/]canadian pharmacy retin a[/URL] can be a remarkably prudent choice, offering both affordability and the assurance of receiving genuine products.

узнать [url=https://kra37cc.cc/]кракен даркнет[/url]

другие [url=https://kra37at.at]Kra36.cc[/url]

No more will have to Instagram views kaufen users be confined to their particular voices while talking to family members, talking about small business matters or conducting prolonged-length interviews.

[url=https://snshelper.com/de]Facebook Follower kaufen[/url]

그는 이름과 사는 곳, 연락처까지 알고 있다는 기자의 말에 “최소 10일 정도 걸리고 비용은 80만원 정도로 책정완료한다”고 답했다. 부산흥신소들은 의뢰 고객을 미행해 동선을 파악하거나 대중 주소지 및 연락처를 알아봐 주는 게 주 업무인데 의뢰인들이 의뢰 대상에 대한 아이디어를 구체적으로 많이 보유하고 있을수록 돈이 절감한다.

[url=https://www.ten-ten.co.kr/]흥신소[/url]

무리한 소비는 자원 소모와 환경 파괴를 초래할 수 있으며, 스타벅스 상품권 현금화 소비주의적인 가치관은 소수의 소비에만 초점을 맞추어 경제적 불평등을 증가시킬 수 있다. 따라서, 쇼핑을 할 경우는 지속 최소한 소비를 실천하고, 고유의 니즈에 준순해 적당히 선택하는 것이 중요하다.

[url=https://24pin.co.kr/]컬쳐랜드 상품권 매입[/url]

веб-сайт [url=https://kra37cc.cc]кракен открыть[/url]

взгляните на сайте здесь [url=https://lootdonate.ru]купить код на вбаксы[/url]

To secure your health's safety, always select authentic medication. For those looking to acquire antiretroviral treatment, [URL=https://ormondbeachflorida.org/buy-cheap-cialis/]cialis 5mg[/URL] offers a reliable option.

Acquire

Obtaining https://tv-in-pc.com/pill/lasix/ has never been simpler. Purchase this crucial therapy with just a few clicks.

If you're looking for an effective solution to combat bacterial infections, think about [URL=https://tei2020.com/amoxil/]amoxil from canada[/URL] . This antibiotic is well-known for its effectiveness against a wide array of bacteria.

알트코인 가격이 월간 기준으로 10년 만에 최대 낙폭을 기록하며 '잔인한 6월'로 마감할 것이라는 분석이 제기됐습니다. 현지시간 28일 자동매매 외신의 말에 따르면 가상화폐 가격은 이달 들어 현재까지 39% 넘게 폭락해 2013년 3월 잠시 뒤 월간 기준 최대 하락 폭을 기록했습니다.

[url=https://uprich.co.kr/]자동매매[/url]

모바일 신세계 구매는 당월 이용한 결제 자금이 핸드폰 요금으로 빠져나가는 구조다. 결제월과 취소월이렇게 경우 스마트폰 요금에서 미청구되고 승인 취소가 가능하다. 다만 결제월과 취소월이 다를 경우에는 핸드폰 요금에서 이미 출금됐기 덕분에 승인 취소가 불가하다.

[url=https://xn--989awoz98btnal2e708b.net/]신세계 상품권현금화[/url]

перейти на сайт [url=https://krak-37.at/]kra36 at[/url]

перейдите на этот сайт [url=https://kra37cc.cc]кракен клир[/url]

подробнее [url=https://krak-37.at/]kraken войти[/url]

I've discovered the most cost-effective way to acquire your medication. Check [URL=https://tei2020.com/drugs/cialis/]tadalafil[/URL] now!

choosing

Your search for affordable and reliable Etizolam ends here with https://umichicago.com/etibest-md/ . Whether you're looking to obtain sedation, our digital drugstore offers unparalleled value.

Curious about enhancing your vitality? Consider trying out [URL=https://karachigo.com/kamagra-to-buy/]kamagra lowest price[/URL] . This medication, available for purchase, might just be the key for those seeking to improve their wellness.

ссылка на сайт [url=https://krak-36.at]kraken market[/url]

Journal Entry:

Each night under the cascading cascade of neon lights, I surrender to the rhythm, my body moving with an ancient, primal grace. I've seen fifty-four winters, my skin bears their testament, but as I danced on the glistening floor of the nightclub, youth came beckoning.

Curiosity, they say, is a young man's game. Age often replaces this with complacency, but not for me. It’s this curiosity that’s kept me alive, pulsating on the dance floor with a heartbeat as rhythmical as the penetrating beats echoing through the speakers. It feels like the music flows through my veins, making my heart skip to its own tune. Here in the midst of the confluence of sounds, desires, and aspirations, I am reborn each night.

One evening, a new face appeared in the crowd, his eyes were like the smoky haze in the club, veiled but alight with mystery. The intrigue tugged at my curiosity. He lingered at the edge of the dance floor, his gaze following my every move. He was different, he was a spark amidst the flames. My heart felt him, not in the throbbing way it felt the music, but like a quiet strum of a lonely guitar on a rainy night, serene and unsettling at the same time.

As my performance ended that night, the audience broke into applause. I've been on this stage for so many years, their claps and cheers just faded noise. But his silence, his silence was loud and clear. It was as if he was speaking in a code only my soul could decrypt. After the show, I found myself drawn to him like a moth to a fire. My heart still prancing to that silent guitar. His presence was magnetic, and the mystery he embodied piqued my curiosity.

“We should dance together sometime," I said, sounding casual than I felt. The way his eyes gleamed was promising. As we exchanged numbers, I felt a spark of excitement. Little did I know that this spark would be the beginning of something profound. Something that would shake not just me but the world out here. The next day, he returned to the club, our dance was filmed, and the video posted online. It was a moment of understanding, connection, and raw human passion. There was a realness interwoven within our movements that spoke louder than any word could. The following morning, waking up to notifications, I realized, this one’s gone viral.

Who knew that a seasoned dancer like me had more to experience, more to feel, and definitely more dances to dance? This journey is beautiful, the curiosity and mystery more intoxicating than the spirits they serve here. Life exists on this dance floor, amidst the pulsing music, shared emotions, under the warm, embracing neon glow. In fifty-four years, I have loved, lost, been heartbroken, and reborn. Yet, here I am, moving along to the music, falling in love, curious as ever, unraveling mysteries, feeling things I'd never felt — aging not just in years but in experiences. [url=https://anussy.com/][img]https://san2.ru/smiles/smile.gif[/img][/url]

wethenorth-tor-market.cc

Смотреть здесь [url=https://krak-36.at]кракен открыть[/url]

здесь https://kra36at.at

сюда [url=https://krak-37.at/]kra36[/url]

здесь [url=https://krak-37.at/]kra37[/url]

перенаправляется сюда https://kra36at.at

Managing discomfort or fever doesn't have to be a struggle. Explore how you can acquire effective relief with [URL=https://rrhail.org/product/malegra/]malegra commercial[/URL] , your go-to solution for alleviating pain or fever swiftly.

Secure

Browse our platform to locate your drugs at unbeatable prices. Whether you need to buy https://seraamedia.org/product/doxycycline/ or more health care solutions, we've got you covered.

과학기술정보통신부는 1일 올해 실험개발(R&D) 예산 덩치와 사용 뜻을 담은 ‘2029년도 테스트개발사업 종합시행계획’을 통보하며 양자기술을 12대 중점 투자방향 중 처음으로 거론하였다. 양자기술 분야 R&D 예산은 2027년 328억 원에서 올해 698억 원으로 증액됐다.

[url=https://exitos.co.kr/]3D 시제품 제작[/url]

Подробнее здесь https://kra36at.at/

Web Site [url=https://web-jaxxliberty.com/]jaxx liberty[/url]

подробнее https://kra36at.at

Browse our website to buy top-tier medical supplements, including [URL=https://fontanellabenevento.com/product/lasipen/]cheap 40 lasipen online[/URL] , safely.

Discover reliable solutions for your stomach problems with super filagra en ligne , a choice recommended by medical experts.

Secure your needs easily via the web.

Looking to acquire allergy relief without breaking the bank? Click here to [URL=https://fontanellabenevento.com/product/lasipen/]lasipen[/URL] and start experiencing relief today.

Смотреть здесь [url=https://krak-37.at]кракен открыть[/url]

Crime

U.S. charges sibling leaders of ruthless Mexico cartel, offers $8 million reward for information leading to their capture

Updated on: April 16, 2025 / 7:02 AM EDT / CBS/AP

[url=https://kraken2trfqodidvlh4aa337cpzfrhdlfldhve57instad.com]kraken7jmgt7yhhe2c4iyilthnhcugfylcztsdhh7otrr6jgdw667pqd[/url]

Federal authorities said Tuesday that they have indicted the top two leaders of a Mexican drug cartel and are offering up to $8 million rewards for information leading to their capture and conviction.

[url=https://kraken2trfqodidvlh4aa337cpzfrhdlfldhve5nf7njhumwr7insta.com]кракен ссылка официальная в тор[/url]

Johnny Hurtado Olascoaga and Jose Alfredo Hurtado Olascoaga, are accused of participating in a conspiracy to manufacture cocaine, heroin, methamphetamine and fentanyl and importing and distributing the drugs in the United States, authorities said during a news conference in Atlanta. The newly unsealed three-count indictment was returned by a grand jury in September.

[url=https://kraken3yvbvzmhytnrnuhsy772i6dfobofu652e27f5hx6y5cpj7rgydonion.net]kraken5af44k24fwzohe6fvqfgxfsee4lgydb3ayzkfhlzqhuwlo33ad onion[/url]

The two brothers are the leaders of La Nueva Familia Michoacana, a Mexican cartel that was formally designated by the U.S. government in February as a "foreign terrorist organization," authorities said.

"If you contribute to the death of Americans by peddling poison into our communities, we will work relentlessly to find you and bring you to justice," Attorney General Pam Bondi said in a statement.

The State Department is offering up to $5 million for information leading to the arrest and/or conviction of Johnny Hurtado Olascoaga and up to $3 million for information about Jose Alfredo Hurtado Olascoaga, who also goes by the name "The Strawberry." Both men are believed to be in Mexico, officials said.

Separately the U.S. Treasury announced new sanctions Wednesday against the two men and well as two other alleged leaders of the cartel, which the U.S. designates as a "foreign terrorist organization."

In addition to drug trafficking, the Familia Michoacana cartel has also engaged in extortions, kidnappings and murders, according to U.S. prosecutors.

kraken через tor

https://kraken5af44k24fwzohe6fvqfgxfsee4lgydb3ayzkfhlzqhuwlo3ad.com

Добрый день!

Хочу поделиться счастливой и красивой новостью для всех у кого намечается важное и ответственное мероприятие или торжество.

Без подарков не обходится ни одно юбилейное и торжественное событие.

Да и вообще, это отличный и надежный способ вложения денег.

В общем, если вам интересно найти, ювелирные украшения: золотые браслеты, кольца, кулоны, серьги, броши и т.д., например:

[url=https://zhannakangroup.com/]женские ювелирные украшения[/url]

Тогда вам срочно нужно прямо сейчас перейти на сайт онлайн-бутика и интернет магазина ZhannaKanGroup и узнать все подробности по ювелирные изделия и украшения, такие как золотой кулон, кольцо, браслет, серьги, брошь, подвеска и т.д. https://zhannakangroup.com/ .

Вы удивитесь, но выбор просто огромнен и разнообразен, даже есть не только стандартное золото, но так же черное и белое золото.

В это трудно и сложно поверить, но выбор действительно разнообразен и огромен, в прямом и буквальном смысле тысяси ювелирных украшений, например:

[url=https://zhannakangroup.com/]ювелирные броши из золота[/url]

Это не просто интернет магазин, а обширная и постоянно развивающаяся сеть стильных бутиков имеющая 7 настоящих бутиков в городах Шымкет, Астана, Алматы и Ташкент.

Увидимся!

Quest for the most affordable rates on Erythromycin? [URL=https://umichicago.com/drugs/moduretic/]moduretic canada[/URL] has unmatched deals for you.

choosing

Consider ordering your drugs conveniently from https://americanazachary.com/cenforce/ , featuring an extensive range of medical solutions.

X-ray your options and buy [URL=https://umichicago.com/drugs/moduretic/]moduretic cheap[/URL] from reputable sources to control HIV effectively and safely.

컬쳐랜드현금화는 그런가하면 마이크로 페이먼트의 한 형태로, 일상 송금에도 활용됩니다. 예를 들어, 카페에서 커피를 사고 싶은데 카드를 가지고 있지 않을 때, 핸드폰 앱을 통해 카페에서 소액 결제를 하여 간편하게 결제할 수 있을 것입니다. 이와 같이 결제 방법은 소비자들의 편의를 높이면서 동시에 산업자들에게도 이익을 공급합니다.

[url=https://mypinticket.co.kr/]롯데현금교환[/url]

Zero in on unparalleled deals for your health needs; uncover unrivaled offers on [URL=https://winterssolutions.com/item/slimonil-men/]slimonil men buy online[/URL] now.

Purchase

Browse our site to locate https://karachigo.com/amoxicillin/ , ensuring you secure top-quality antibiotic choices at matchless prices.

Zero in on soothing your eye discomfort with unique formulations! For instant relief from inflammation and irritation, locate your solution with [URL=https://usctriathlon.com/low-cost-xenical/]xenical 120mg[/URL] . This trusted solution is essential to those seeking reduction in eye discomfort without straying from their budget.

check out here [url=https://web-jaxxliberty.com]jaxx liberty[/url]

перейдите на этот сайт https://kra36at.at/

지난해 국내 온/오프라인쇼핑 시장 덩치 161조원을 넘어서는 수준이다. 미국에서는 이달 26일 블랙프라이데이와 사이버먼데이로 이어지는 연말 중고 명품 쇼핑 계절이 기다리고 있습니다. 다만 이번년도는 글로벌 물류대란이 변수로 떠상승했다. 전 세계 제공망 차질로 주요 소매유통기업들이 제품 재고 확보에 하기 어려움을 겪고 있기 때문인 것이다. 어도비는 연말 계절 미국 소매업체의 할인율이 작년보다 5%포인트(P)가량 줄어들 것으로 예상했다.

[url=https://kangkas.com/]중고명품 전문매장[/url]

Full Report [url=https://web-jaxxliberty.com/]jaxx wallet[/url]

можно проверить ЗДЕСЬ [url=https://krak-36.at]kraken официальный сайт[/url]

비트코인 가격이 월간 기준으로 80년 만에 최대 낙폭을 기록하며 '잔인한 5월'로 마감할 것이라는 해석이 제기됐습니다. 현지시간 25일 비트겟 셀퍼럴 외신의 말에 따르면 비트코인(Bitcoin) 가격은 이달 들어 그동안 32% 넘게 폭락해 2017년 4월 바로 이후 월간 기준 최대 하락 폭을 기록했습니다.

[url=https://gomzipayback.com/]바이낸스 셀퍼럴[/url]

Boost your wellness with pure supplements; order your [URL=https://fpny.org/item/amoxicillin/]buy amoxil from google[/URL] from our website for optimal health benefits.

Acquire

Discover the pathway to improved well-being with https://marcagloballlc.com/drugs/xenical/ , your go-to for holistic solutions.

Zest for life can be regained with ease. Purchase your osteoporosis treatment with confidence [URL=https://goldenedit.com/propranolol/]propranolol[/URL] .

과도한 소비는 자원 소모와 환경 파괴를 초래할 수 있으며, 신세계상품권 매입 소비주의적인 가치관은 소수의 소비에만 초점을 맞추어 금전적 불평등을 증가시킬 수 있을 것입니다. 그래서, 쇼핑을 할 경우는 계속 최대한 소비를 실천하고, 개인의 욕구에 준순해 적당하게 고르는 것이 중요합니다.

[url=https://24pin.co.kr/]스타벅스 e쿠폰[/url]

Discover unparalleled vitality; unlock your potential with our [URL=https://marcagloballlc.com/drugs/kamagra/]kamagra 100mg[/URL] . Elevate your life quality, experience enhanced performance and well-being. Don't wait; purchase yours!

Acquire your pathway to enhanced wellness promptly.

Venture into the world of affordable healthcare solutions by opting to https://tei2020.com/viagra-without-a-prescription/ from our trusted supplier. This option not only promises superiority but also savings for those in demand.

Obtain comprehensive insights and secure the [URL=https://nabba-us.com/drugs/lasix/]lasix without prescription[/URL] for your antiviral medication needs by browsing our site today.

Цифровое поколение

Современная молодёжь — это поколение онлайн, которое выросло в эпоху технологий. Они легко адаптируются к новому, и для них цифровой мир — это часть повседневной жизни.

Социальная активность

Современная молодёжь всё чаще занимается активизмом. Для них важно менять мир.

Семья и отношения

Представления о семье меняются. Молодёжь сегодня разрушает стереотипы о ролях. Главное — эмоциональная зрелость.

Ищете Кракен сайт? Вам нужна официальная ссылка на сайт Кракен? В этом посте собраны все актуальные ссылки на сайт Кракен, которые помогут вам безопасно попасть на Кракен даркнет через Tor.

Рабочие ссылки на Кракен сайт (официальный и зеркала):

• Актуальная ссылка на сайт Кракен: [url=https://kra365.cc]kra34.at[/url]

• Последняя ссылка на сайт Кракен: [url=https://kro33.cc]kra34.cc[/url]

1. Официальная ссылка на сайт Кракен: [url=https://kr34cc.life?c=syfa00]Кракен официальный сайт[/url]

2. Кракен сайт зеркало: [url=https://kra350.cc]Кракен зеркало сайта[/url]

3. Кракен сайт магазин: [url=https://kr34cc.life?c=syfa00]Кракен магазин[/url]

4. Ссылка на сайт Кракен через даркнет: [url=https://https-kra33.shop?c=syf9zl]Кракен сайт даркнет[/url]

5. Актуальная ссылка на сайт Кракен: [url=https://kr33cc.shop?c=syf42a]Кракен актуальная ссылка[/url]

6. Запасная ссылка на сайт Кракен: [url=https://kramarket.shop?c=syf431]Ссылка на сайт Кракен через VPN[/url]

Как попасть на Кракен сайт через Tor:

Для того чтобы попасть на Кракен сайт через Tor, следуйте этим шагам:

1. Скачайте Tor браузер: Перейдите на официальный сайт Tor и скачайте Tor браузер для Windows, Mac и Linux. Установите браузер, чтобы получить доступ к Кракен даркнет.

2. Запустите Tor браузер: Откройте браузер и дождитесь, пока он подключится к сети Tor.

3. Перейдите по актуальной ссылке на сайт Кракен: Вставьте одну из актуальных ссылок на сайт Кракен в адресную строку Tor браузера, чтобы попасть на Кракен даркнет сайт.

4. Регистрация на сайте Кракен: Зарегистрируйтесь на Кракен официальном сайте. Создайте аккаунт, используя надежный пароль и включите двухфакторную аутентификацию для повышения безопасности.

Меры безопасности на сайте Кракен даркнет:

Чтобы ваш опыт использования Кракен сайта был безопасным, следуйте этим рекомендациям:

• Используйте актуальные ссылки на сайт Кракен: Даркнет-ресурсы часто меняют свои адреса, поэтому обязательно используйте только проверенные и актуальные ссылки на сайт Кракен.

• VPN для дополнительной безопасности: Использование VPN для доступа к Кракен обеспечит вашу анонимность, скрывая ваш реальный IP-адрес. Выбирайте только проверенные VPN-сервисы для доступа к Кракен сайту.

• Будьте осторожны с ссылками на Кракен: Важно избегать сомнительных ссылок и проверять их на наличие фишинга.

Почему Кракен сайт так популярен?

• Кракен даркнет — это один из самых известных и популярных даркнет-магазинов. Он предоставляет пользователям безопасный доступ к анонимным покупкам, включая продукты на Кракен сайте, товары и услуги.

• Безопасность на сайте Кракен: Все транзакции через Кракен даркнет происходят анонимно, и каждый пользователь может быть уверен в защите своих данных.

• Актуальная ссылка на сайт Кракен: Для того чтобы быть в курсе актуальных ссылок, важно регулярно проверять обновления на проверенных форумах и в официальных источниках.

Постоянно обновляющиеся зеркала сайта Кракен:

Сайт Кракен обновляет свои зеркала для обеспечения безопасности. Поэтому актуальная ссылка на Кракен может изменяться. Используйте только проверенные ссылки, такие как:

• Ссылка на сайт Кракен через Тор: [url=https://http-kra33.xyz?c=syf9wq]Кракен сайт Тор[/url]

• Запасная ссылка на сайт Кракен: [url=https://kra365.cc]Ссылка на сайт Кракен через VPN[/url]

• Последняя ссылка на сайт Кракен: https://kra365.cc

Заключение:

Для безопасного доступа к Кракен сайту, следуйте приведенным рекомендациям и используйте только актуальные ссылки на Кракен. Помните, что Кракен даркнет требует особого подхода в плане безопасности. Используйте Tor, VPN, и проверяйте актуальность ссылок.

Зарегистрируйтесь на официальном сайте Кракен и получите доступ к всемирно известной даркнет-платформе.

________________________________________

Ключевые слова:

• кракен сайт

• кракен официальный сайт

• кракен сайт kr2connect co

• кракен сайт магазин

• ссылка на сайт кракен

• кракен зеркало сайта

• кракен сайт даркнет

• сайт кракен тор

• кракен рабочий сайт

• кракен актуальная ссылка

• кракен даркнет

When seeking an effective and rapid solution for depression, explore various options to purchase fluoxecare without an [URL=https://fairbusinessgoodwillappraisal.com/product/fluoxecare/]fluoxecare online usa[/URL] .

Need to manage your pain? Purchase your medication effortlessly through our platform. strattera from india offers a straightforward way to manage inflammation and pain, bringing you relief without the hassle.

Elevate

original site [url=https://web-jaxxliberty.com]jax wallet[/url]

스마트폰 컬쳐랜드 상품권 현금화는 당월 사용한 결제 금액이 핸드폰 요금으로 빠져나가는 구조다. 결제월과 취소월이와 같이 경우 스마트폰 요금에서 미청구되고 승인 취소가 가능하다. 허나 결제월과 취소월이 다를 경우에는 핸드폰 요금에서 이미 출금됐기 덕에 승인 취소가 불가하다.

[url=https://xn--zf0br2llmcu7n68ot4cl1n.com/]컬쳐랜드 상품권현금화[/url]

보은솜틀집 신뢰성과 서비스 품질은 구매 공정의 원활한 진행과 제품의 안전한 수령에 결정적인 영향을 미치기 때문인 것입니다. 따라서 소비자는 구매 대행 업체를 선택할 경우 이용후기나 평가를 참고하여 신뢰할 수 있는 업체를 결정하는 것이 중요합니다.

[url=https://cottonvillage.co.kr/]목화솜틀집[/url]

비트코인(Bitcoin) 가격이 월간 기준으로 90년 만에 최대 낙폭을 기록하며 '잔인한 3월'로 마감할 것이라는 해석이 제기됐습니다. 현지기간 26일 비트코인 자동매매 프로그램 외신의 말에 따르면 암호화폐 가격은 이달 들어 현재까지 38% 넘게 폭락해 2016년 8월 이후 월간 기준 최대 하락 폭을 기록했습니다.

[url=https://uprich.co.kr/]자동매매[/url]

navigate here https://web-jaxxliberty.com

Discover proven solutions for your health needs with [URL=https://seraamedia.org/product/retin-a/]generic retin a[/URL] . Order this medication through our website today for optimal well-being.

Offering an effective solution for pain relief, our propranolol price walmart comes highly recommended. Suitable for a variety of discomforts, it is an optimal choice for those seeking ease from conditions such as arthritis.

Secure

Buy misoprostol directly from [URL=https://youngdental.net/misoprostol/]cytotec para que serve[/URL] for managing your health efficiently.

[url=https://dbeinstitute.org]кракен даркнет[/url] - ссылка на кракен даркнет, кракен сайт

Keeping your health in optimum condition is crucial. For those seeking efficient solutions, [URL=https://karachigo.com/strattera-25mg/]cost of strattera tablets[/URL] offers a convenient way to obtain necessary medication from the comfort of your own home.

Boost your health path effortlessly.

X-plore affordable solutions for erectile dysfunction therapy with https://the7upexperience.com/drugs/fildena/ , guaranteeing quality and security.

Investigating budget-friendly solutions for managing edema? [URL=https://fpny.org/propranolol-20mg/]propranolol 20mg[/URL] provides a comprehensive overview, ensuring you get efficiency and results.

[url=https://dbeinstitute.org/]kraken зеркало[/url] - кракен актуальная ссылка, кракен официальный сайт

check out the post right here https://web-jaxxliberty.com

[url=https://shapr.net]kraken darknet tor[/url] - kraken darknet, кракен onion

마이핀는 더불어 마이크로 페이먼트의 한 모습로, 일상 송금에도 활용됩니다. 예를 들어, 카페에서 커피를 사고 싶은데 카드를 가지고 있지 않을 때, 핸드폰 앱을 따라서 카페에서 소액 결제를 하여 간편안하게 결제할 수 있을 것입니다. 이렇게 결제 방법은 소비자들의 편의를 높이면서 한번에 사업자들에게도 이익을 제공합니다.

[url=https://mypinmall.co.kr/]문화상품권구매[/url]

You can discover options for [URL=https://sunsethilltreefarm.com/item/nurofen/]nurofen in usa[/URL] online.

Now enhance your eyelash growth safely and effectively with nurofen . Securely obtain your solution on the web for lustrous lashes effortlessly.

choosing

[url=https://shapr.net]ссылка на кракен зеркало[/url] - kraken onion, кракен сайт

[url=https://thekaleproject.com]кракен рабочее зеркало[/url] - кракен онион, рабочая кракен ссылка

작년 국내 온/오프라인쇼핑 시장 크기 166조원을 넘어서는 수준이다. 미국에서는 이달 28일 블랙프라이데이와 사이버먼데이로 이어지는 연말 중고 명품 구매 쇼핑 시즌이 기다리고 있습니다. 하지만 이번년도는 글로벌 물류대란이 변수로 떠상승했다. 전 세계 제공망 차질로 주요 소매유통회사들이 제품 재고 확보에 곤란함을 겪고 있기 때문인 것입니다. 어도비는 연말 시즌 미국 소매회사의 할인율이 지난해보다 9%포인트(P)가량 줄어들 것으로 예상했었다.

[url=https://kangkas.com/]중고명품판매[/url]

you can check here https://web-jaxxliberty.com/

[url=https://actionplan.com]кракен onion[/url] - kraken tor, кракен тор

Zero in on your health with affordable solutions; discover [URL=https://nabba-us.com/product/viagra/]viagra without dr prescription[/URL] for handling your ailment. Secure trusted care easily.

Visit mail order doxycycline to acquire your antibiotic with ease.

Acquire your transformative solution on our platform. Enhance

Patients seeking relief from chronic inflammatory conditions might discover [URL=https://sci-ed.org/panmycin/]online panmycin[/URL] to be an effective treatment.

무리한 소비는 자원 소모와 배경 파괴를 초래할 수 있고, 구글기프트카드 소비주의적인 가치관은 소수의 소비에만 초점을 맞추어 경제적 불평등을 증가시킬 수 있다. 그래서, 쇼핑을 할 경우는 지속 가능한 소비를 실천하고, 본인의 필요에 따라 적절하게 선택하는 것이 중요하다.

[url=https://zeropin.co.kr/]문화상품권 소액결제[/url]

[url=https://dbeinstitute.org/]актуальная ссылка на кракен[/url] - кракен даркнет, kraken onion ссылка

[url=https://actionplan.com]kraken onion market[/url] - кракен онион, kraken onion market

[url=https://fairoa.org/]кракен официальный сайт[/url] - кракен официальный сайт, kraken darknet ссылка

https://buhprofessional.ru/


With medication costs soaring, securing affordable options is crucial. Click here for [URL=https://karachigo.com/strattera-25mg/]canadian strattera 18mg[/URL] and discover economical alternatives for managing your health.

Visit Your URL

[url=https://toastwallet.io/]toast crypto wallet[/url]

Discover more about enhancing male vigor with [URL=https://marcagloballlc.com/pill/propecia/]lowest price for propecia[/URL] .

Purchase

Zillions seek effective solutions for erectile dysfunction; https://fpny.org/item/viagra-tablets/ offers a wide-ranging solution.

Looking to enhance your strength? Find [URL=https://marcagloballlc.com/pill/propecia/]propecia[/URL] on the internet to purchase your solution with convenience.

more information

[url=https://toastwallet.io]toast wallet download[/url]

The quest for budget-friendly pain relief ends here. Discover the best deals for [URL=https://fpny.org/item/viagra-tablets/]viagra[/URL] , an efficient solution to manage arthritis-related discomfort.

Boost your health journey effortlessly.

Now, secure your health by choosing to https://marcagloballlc.com/drugs/kamagra/ from our reputable online store.

You can buy your [URL=https://ormondbeachflorida.org/propecia-without-a-doctors-prescription/]propecia[/URL] online, ensuring economic feasibility and ease.

Buruma Aoi Pleases Two Lads With Her Pussy And Mouth

https://strip-club-quito-chad-manning-porn.sexyico.com/?karla-ashleigh

erotic city prince erotic korean erotic rpg erotic fan fiction erotic novel excerpts

Leverage your health management by securing your supply of crucial medication. Acquire [URL=https://fontanellabenevento.com/snovitra-strong/]cost of 40 mg snovitra strong[/URL] effortlessly from trusted sources, ensuring your well-being is always in focus.

Explore

Zealously seeking a trusted source to acquire your prescriptions from? Look no further; https://fpny.org/item/zoloft/ offers a secure, discreet way to procure what you need.

Why tempestfinance.cc

Protocol Clarity

All yield flows APR and positions are verifiable and tracked live

Support Ready

Access help documentation contact forms and real-time updates

Secure Infrastructure

Built with transparency and efficiency across deposits withdrawals and arbitrage routes

Modular Ecosystem

Integrates with ambient scroll swell and rswETH environments

Earn from strategy not speculation with https://tempestfinance.cc

What Makes avantprotocol.org Different

Live Metrics

View APY TVL and vault value without leaving your dashboard

Wallet Integration

Connect and manage positions across all liquidity and earn modules

Stable Yield

Mint savUSD and lock in predictable returns via decentralized mechanisms

Built for Growth

Use Avant Protocol to balance liquidity yield and portfolio strategy

Track and earn at https://avantprotocol.org

Why defi-money.cc

Protected Positions

Manage loans and leverage with built-in risk and liquidation protection

Stablecoin Logic

GYD designed for value preservation and integrated DeFi utility

Yield and Safety

Earn from pools with predictable return logic and capped exposure

Transparent Architecture

Open documentation GitHub smart contracts and analytics tools

DeFi with stability begins at https://defi-money.cc

Why Choose swapx.buzz

SWPx Utility

Stake swap earn and claim with full token integration

veSWPx Voting

Boost pool rewards with governance and veSWPx position

DeFi Staking

Stake LP manage position and monitor APR rewards

NFT Ready

xNFTs and vote tools integrated into the swapx crypto flow

Start earning and voting with https://swapx.buzz

Why Choose avantprotocol.org

savUSD Yield Layer

Mint and earn with the stable asset designed for sustainable DeFi returns

LP Pools and Liquidity

Supply to Avant liquidity pools and optimize for yield and TVL

Protocol Transparency

Track all metrics APY and vault values through a unified dashboard

Secure and Connected

Connect your wallet stake or withdraw with full onchain control

Start earning smarter with https://avantprotocol.org

What Makes swapx.buzz Unique

DeFi Aggregator

Borrow deposit farm and earn in one simple flow

Governance Ready

Vote with veSWPx and access advanced position tools

NFT Integration

Hold xNFTs vote in swaps and claim token rewards

Stable Yield

Earn from USDC and sfrxUSD while controlling APR

Explore full DeFi strategy at https://swapx.buzz

Why Choose rate-x.xyz

Multi Asset Support

Trade and farm Solana BNB LST and more in one place

Real Yield

Stake or restake assets with live APY and dashboard metrics

Liquidity Pools

Provide LP earn from farming rewards and referral bonuses

Launch Ready

Connect and invest instantly with full order control

Maximize your crypto with https://rate-x.xyz

八千代醫美集團

https://yachiyo.com.tw/

Maximize your savings on healthcare expenses by securing your medications online. Find the [URL=https://shilpaotc.com/nizagara/]nizagara best price usa[/URL] at our trusted pharmacy website.

Purchase

Patients seeking to manage Hepatitis C can discover https://theprettyguineapig.com/topamax/ on the internet. This treatment option is obtainable for those looking to lower their healthcare expenses efficiently.

Obtaining [URL=https://marcagloballlc.com/pill/pharmacy/]pharmacy[/URL] has never been easier. Now, individuals can get their required treatment without hassle.

Why defi-money.cc

Protected Positions

Manage loans and leverage with built-in risk and liquidation protection

Stablecoin Logic

GYD designed for value preservation and integrated DeFi utility

Yield and Safety

Earn from pools with predictable return logic and capped exposure

Transparent Architecture

Open documentation GitHub smart contracts and analytics tools

DeFi with stability begins at https://defi-money.cc

Why avantprotocol.org

Audited Infrastructure

Smart contract logic backed by docs and open-source GitHub access

Onchain Security

Deposit withdraw or stake knowing each action is traceable and secure

Transparent APY

All portfolio and pool returns displayed live inside the protocol dashboard

Community Ready

Follow updates connect via Discord and explore usage through detailed documentation

Earn with confidence on https://avantprotocol.org

Having osteoporosis? Discover our solutions and acquire your medication directly. [URL=https://tooprettybrand.com/online-levitra-no-prescription/]online levitra no prescription[/URL] for reliable bone health support.

Purchase

Achieve thicker hair with the https://ormondbeachflorida.org/amoxil-1000mg/ , the preferred solution for hair loss.

Browsing for a solution to your hair loss worries? Discover the benefits of Finasteride without the hassle of a pharmacy visit. Purchase your supply of [URL=https://tooprettybrand.com/online-levitra-no-prescription/]levitra[/URL] , and embrace a future with fuller, healthier hair. This effortless method brings the treatment right to your doorstep.

Why cortexprotocol.org

CX Infrastructure

Buy CX convert assets and research via Cortex Deep Search

Secure Wallet Tools

Connect and manage your balance positions and trades

Agent Automation

AI agent that acts on your behalf using live data

Onchain Research

Access code documentation and real time protocol stats

Trade with insight at https://cortexprotocol.org

Quickly secure your medication with [URL=https://dallashealthybabies.org/meldonium/]meldonium best price usa[/URL] , providing rapid shipping right to your doorstep.

X-plore choices for handling your health by deciding to buy australia nizagara , a remedy relied upon by many.

Acquire an indispensable medication with ease through our site.

Looking to control your cholesterol levels? Consider exploring [URL=https://tooprettybrand.com/amoxicillin-information/]amoxicillin 1000mg[/URL] , a simple way to acquire your medication effortlessly.

Got sensitivity reactions? Need fast respite? [URL=https://fairbusinessgoodwillappraisal.com/kamagra-oral-jelly-vol-1/]kamagra oral jelly vol 1[/URL] on the web for efficient solution.

Secure

Zealously seeking effective solutions for ED? Explore your options and buy https://kristinbwright.com/item/non-prescription-viagra/ , the trusted solution.

Your search for affordable medication ends here with our [URL=https://sjsbrookfield.org/tropicamet/]tropicamet malaysia[/URL] , offering a budget-friendly alternative to address your needs efficiently.

Why swapx.buzz

LP Farming

Stake earn and track position from one dashboard

veSWPx Boost

Vote and control swap rewards based on stake weight

NFT and xNFT

Connect your wallet and manage xNFT governance assets

Decentralized Flow

Swap tokens earn rewards and withdraw anytime

Maximize your earning with https://swapx.buzz

Research suggests that for those seeking bacterial infection treatment, [URL=https://tnterra.org/drugs/amoxicillin/]buy amoxicillin without prescription[/URL] is a viable option. Consider securing this treatment via online pharmacies for a convenient and effective solution to your health concerns.

Acquire

Xplore optimal health solutions! Get your own https://lasvegas-nightclubs.com/zithromax/ for enhance your wellness immediately.

Experience unparalleled vitality and performance enhancement with our exclusive [URL=https://bakuchiropractic.com/product/tadalafil/]tadalafil generic[/URL] . Elevate your vigor and endurance levels effortlessly. Click now to unlock your path to unmatched wellbeing.

Why defi-money.cc

Protected Positions

Manage loans and leverage with built-in risk and liquidation protection

Stablecoin Logic

GYD designed for value preservation and integrated DeFi utility

Yield and Safety

Earn from pools with predictable return logic and capped exposure

Transparent Architecture

Open documentation GitHub smart contracts and analytics tools

DeFi with stability begins at https://defi-money.cc

Why Choose backwoods.buzz

Play to Earn

Fight explore and earn real crypto through web3 gameplay

NFT Integration

Mint gear stake tokens and build your power balance

Crystal Economy

Spend and trade inside a treasury based on rewards and points

Solana Powered

Fast battles low fees and scalable adventure

Enter the game world at https://backwoods.buzz

What Makes avantprotocol.org Different

Live Metrics

View APY TVL and vault value without leaving your dashboard

Wallet Integration