By Dr. Lamia Alam with Dr. Shannon Cole.

Imagine a scenario where an artificially intelligent (AI) diagnostic system can not only deliver complex diagnoses but can also articulate and communicate the reasoning behind its decisions in a way that both patients and physicians can grasp with ease. Intriguing, isn't it?

As AI is emerging as a beacon of hope in healthcare, a 2021 study by Drs. Lamia Alam and Shane Mueller shed light on how the ability of AI to communicate a medical rationale could play out in real-world applications. This timely work suggests that the success of medical AI systems hinges on both 1) the ability of medical AI to deliver precise diagnoses and on 2) its capacity to provide meaningful explanations for those diagnoses. Critically, for a medical AI to succeed, it needs to be capable of helping people make sense of their health circumstances and what they can do about them.

Can You See What I Am Saying?: Trust and Satisfaction in Medical AI Comes from Visualizations and Explanations

In the study, researchers conducted two simulation experiments to evaluate the effectiveness of explanations in AI diagnostic systems. First, they explored the effectiveness of global explanations (which answer “how does the AI usually make decisions?”) and local explanations (which answer “why did the AI make this particular decision?”), each compared to no explanation.

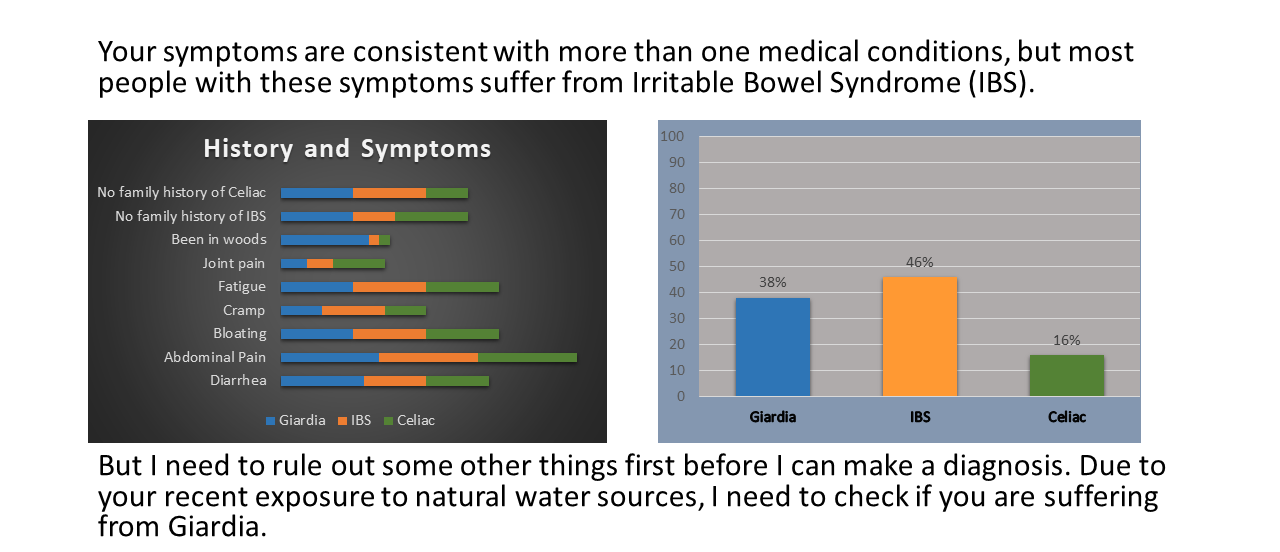

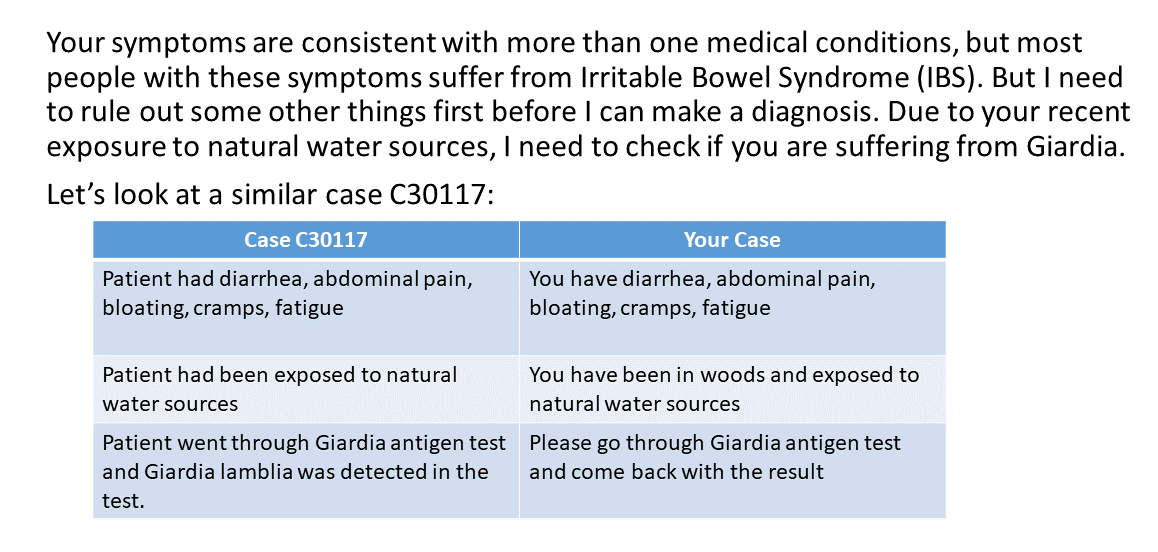

In the second experiment, researchers examined the effectiveness of probability-based graphics and examples (see Example 1) as forms of explanation and compared their effect on patient satisfaction and trust to that of text-based rationales (see Example 2). The experimenters explained the AI's most likely diagnosis, and the examples were of similar cases previously handled by the AI. The researchers found that both the presence and the type of explanation significantly influence patient satisfaction and trust, especially during critical re-diagnosis periods. These are pivotal moments in which richer explanations, such as those supplemented with visualizations and examples, are most effective at evoking trust in the AI's decisions.

The study also unearthed some nuanced findings that are especially relevant to AI development. For example, the impact of explanations was found to be time-sensitive, with the effect on user trust and satisfaction varying with the context (level of patient condition acuity, diagnosis, etc.) and the timing of explanation delivery. Explanations were more effective during critical situations, when patients were re-diagnosed and the AI had to change its diagnosis to a new, more accurate one. These are critical situations in which users need more clarity about decisions because the AI has failed to provide a useful solution. When the medical AI used graphics or example-based explanations to show why it had reached a different conclusion, patients felt secure in the new information. This suggests that AI developers must be judicious about both when and how they present explanations to users, avoiding information overload and the resulting confusion when the user does not need a more detailed explanation. Moreover, the medium of explanation matters a great deal. Catering to diverse learning styles and preferences helps patients get important information in a format that they can connect with, digest, and understand. The information-rich visual and example-based explanations emerged as clear winners, outperforming simple written, logic-based text explanations. Further, these findings show that clarity corresponds to trust: it is important to take into consideration the emotions evoked when patients are attempting to understand new information about a potentially dire medical situation.

What Does XAI Mean for the Future of Medicine?: A Call for Regulating XAI Implementation

Collectively, this work offers valuable insights into how AI systems can be fine-tuned to better serve both patients and healthcare professionals. Downstream, a clearer understanding of a medical AI's decision-making yields many benefits: it enhances patient and provider understanding of the patient's health, symptomology, disease, and treatments, and of why those treatments are important. It is clear that AIs need to be designed to use easy-to-understand graphics, charts, and examples to explain the differential diagnosis they are considering, including what is most likely and what is less likely for the user. (A differential diagnosis is a systematic method used by doctors to identify a disease or condition by considering and narrowing down a list of potential causes of a patient's symptoms through various diagnostic techniques.)

For example, medical AIs can also present previous cases to illustrate similarities or contrasts with the user’s condition, as simply stating a conclusion in plain text may not sufficiently convince a user of the reasoning behind the AI’s decision-making approach. Imagine a medical AI capable of explaining its decision-making process, the details of a patient’s health information, symptoms, and diagnosis, and the reasoning behind and importance of adherence to treatment. What if it were capable of explaining the diagnosis and proposed treatment in a way that calms patient fears or anger about their health status or about what will likely happen in the future? It is inherently calming when people can make sense of where they are and what needs to happen. Consequently, engendering understanding of conditions and circumstances can lead to better treatment compliance and empower patients to self-advocate through informed care. From the provider side, a well-designed medical XAI could considerably reduce the burden of explanation, conflict, and emotional labor involved in delivering bad news.

By intentionally making the integration of explanation features into AI systems a top priority and rethinking how explanations are delivered, developers can bolster user trust, satisfaction, and confidence in these transformative technologies. Moreover, by harnessing the power of explanations that connect with people, AI has the potential not only to revolutionize medical diagnosis but also to foster a deeper sense of trust and collaboration between humans and machines. This research points us toward a more patient-centric approach to AI development, one that prioritizes transparency, usability, and efficacy. If people can make sense of what happens inside the ‘black box’, including how an AI works and why and when it may fail, they will be better able to accept AI-derived decisions.

Additionally, this research highlights the urgent need for legislation and regulations requiring medical AIs to have better explanatory power as a core feature. Medical AIs need to be able to give both clinicians and patients a clear rationale for their medical recommendations, and these XAIs need to be created with medical decision-makers in the development loop. Further, medical AIs need to offer explanations that accommodate diverse learning styles, languages, and cultural norms to ensure equitable healthcare delivery, support adherence to treatment, and equip patients to self-advocate through informed care.

In conclusion, Alam and Mueller's study serves as a guiding light in the ever-evolving landscape of healthcare AI. As we navigate the complexities of integrating technology into medicine, let us not forget the profound impact that a simple explanation can have on patient outcomes and patient experiences. After all, in the quest for better health, clarity is key and XAI is poised to illuminate the path ahead.

About the authors:

Dr. Lamia Alam is a postdoctoral fellow at Johns Hopkins Armstrong Institute for Patient Safety and Quality. Her research interests include AI in healthcare, human-AI teaming, human factors and system engineering solutions in high-risk healthcare environments.

Dr. Shannon Cole is the Senior Medical Writer and Editor at The Armstrong Institute for Patient Safety and Quality at Johns Hopkins Medicine. They facilitate organizational communication and dissemination efforts between research and clinical operations, including leading the "AI on AI" series.

The opinions expressed here are those of the authors and do not necessarily reflect those of The Johns Hopkins University.