By Dr. Lamia Alam with Dr. Shannon Cole.

Imagine a scenario where an artificially intelligent (AI) diagnostic system can not only deliver complex diagnoses but can also articulate and communicate the reasoning behind its decisions in a way that both patients and physicians can grasp with ease. Intriguing, isn't it?

As AI emerges as a beacon of hope in healthcare, a 2021 study by Drs. Lamia Alam and Shane Mueller sheds light on how the ability of AI to communicate a medical rationale could play out in real-world applications. This timely work suggests that the success of medical AI systems hinges on both 1) their ability to deliver precise diagnoses and 2) their capacity to provide meaningful explanations for those diagnoses. Critically, for a medical AI to succeed, it needs to be capable of helping people make sense of their health circumstances and what they can do about them.

Can You See What I Am Saying?: Trust and Satisfaction in Medical AI Comes from Visualizations and Explanations

In the study, researchers conducted two simulation experiments to evaluate the effectiveness of explanations in AI diagnostic systems. First, they explored the effectiveness of global explanations (answering “how does the AI usually make decisions?” questions) and local explanations (answering “why did the AI make a particular decision?” questions), each compared to no explanation.

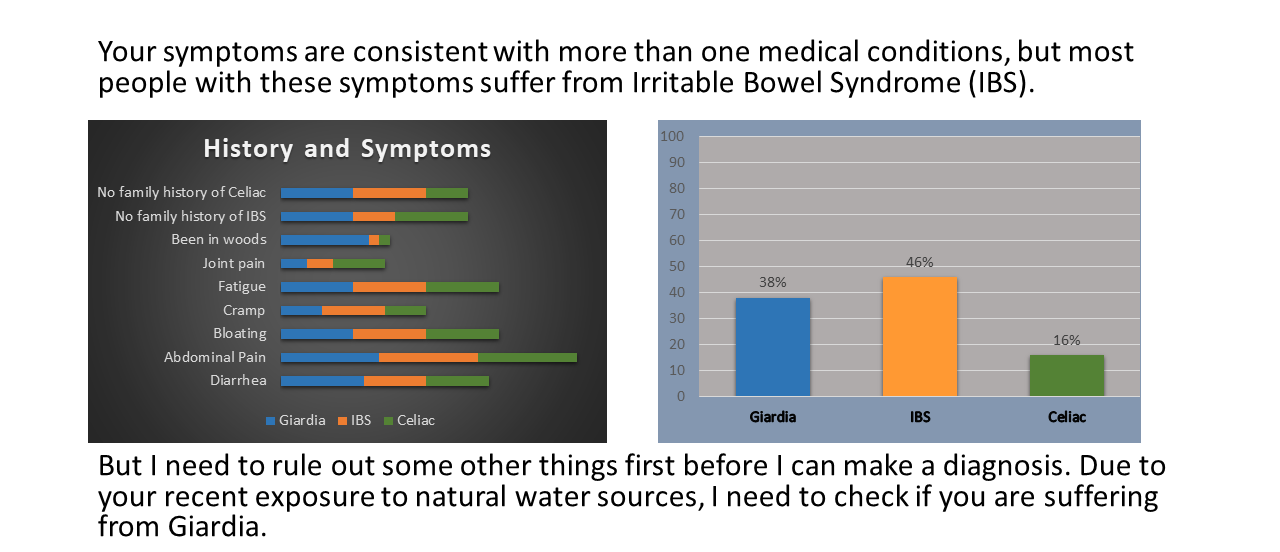

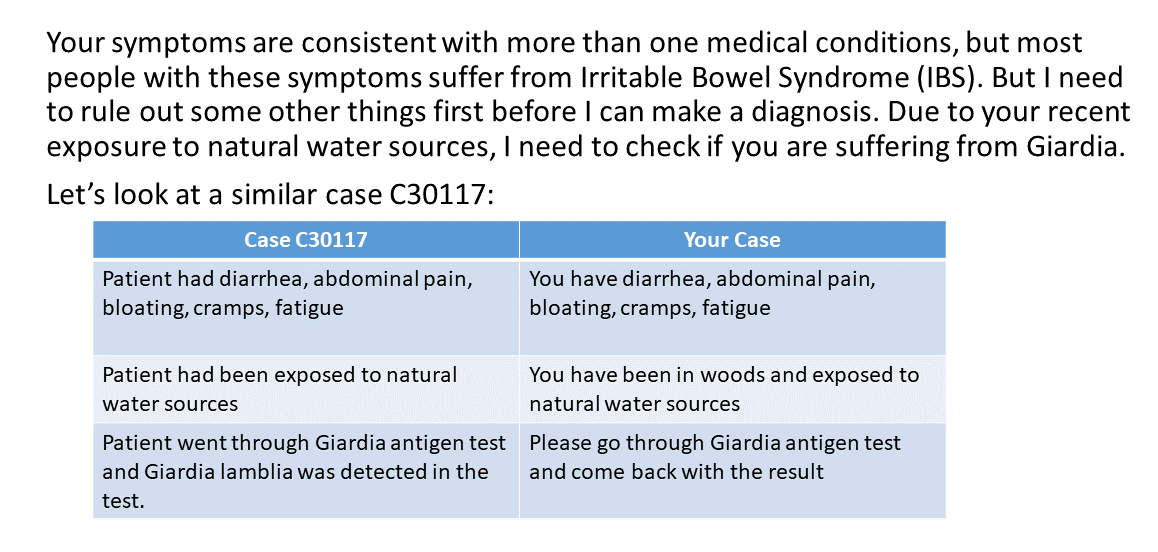

In the second experiment, researchers examined the effectiveness of probability-based graphics and examples (see Example 1) as forms of explanation and compared their effect on patient satisfaction and trust to that of text-based rationales (see Example 2). The experimenters explained the AI's most likely diagnosis, and the examples were of similar cases previously handled by the AI. The researchers discovered that explanations, and the type of explanation, wield a significant influence on patient satisfaction and trust, especially during critical re-diagnosis periods. These are precious moments in which richer explanations, such as those supplemented with visualizations and examples, are most effective at evoking trust in the AI's decisions.

The study also unearthed some nuanced findings that are especially insightful for AI development. For example, the impact of explanations was found to be time-sensitive, with variable impact on user trust and satisfaction depending on the context (level of patient condition acuity, diagnosis, etc.) and the timing of explanation delivery. Explanations were more effective during critical situations, when patients were re-diagnosed and the AI had to change its diagnosis to a new, more accurate one. These are critical situations in which users need more clarity about decisions because an AI has failed to provide a useful solution. Because the medical AI augmented diagnoses by using graphics or example-based explanations about why it reached a different conclusion, patients felt secure in the new information. This suggests that AI developers must be extremely judicious in both when and how they present explanations to users, avoiding unnecessary information overload and subsequent confusion when the user may not need a more detailed explanation. Moreover, the medium of explanation matters a great deal. Catering to diverse learning styles and preferences among users helps patients get important information in a palatable format that they can connect with, digest, and understand. The information-rich visual and example-based explanations emerged as clear winners, outperforming simple, logic-based text explanations. Further, these findings show that clarity corresponds to trust: it is important to take into consideration the emotions evoked when patients are attempting to understand new information about their potentially dire medical situation.

What Does XAI Mean for the Future of Medicine?: A Call for Regulating XAI Implementation

Collectively, this work offers valuable insights into how AI systems can be fine-tuned to better serve both patients and healthcare professionals. Downstream, a clearer understanding of a medical AI's decision-making yields many benefits: it enhances patient and provider understanding of health, symptomology, disease, treatments, and why treatments are important. It is clear that AIs need to be designed to use easy-to-understand graphics, charts, and examples to explain to the user the differential diagnosis they are considering, what is most likely, and what is less likely. (A differential diagnosis is a systematic method used by doctors to identify a disease or condition by considering and narrowing down a list of potential causes of a patient's symptoms through various diagnostic techniques.)

For example, medical AIs can present previous cases to illustrate similarities or contrasts with the user’s condition, as simply stating a conclusion in plain text may not sufficiently convince a user of the reasoning behind the AI’s decision-making approach. Imagine if a medical AI were capable of explaining its decision-making process, the details of a patient’s health information, symptoms, and diagnosis, and the reasoning behind and importance of adherence to treatment. What if it were capable of explaining the diagnosis and proposed treatment in a way that calms patient fears or anger about their health status or what will likely happen in the future? It is inherently calming when people can make sense of where they are and what needs to happen. Consequently, engendering understanding of conditions and circumstances will lead to better treatment compliance and empower patients to self-advocate through informed care. From the provider side, a well-designed medical XAI (explainable AI) could considerably reduce the burden of explanation, conflict, or emotional labor in delivering bad news.

By intentionally making the integration of explanation features into AI systems a top priority and repackaging the delivery of explanations, developers can bolster user trust, satisfaction, and confidence in these transformative technologies. Moreover, by harnessing the power of explanations that connect with people, AI has the potential not only to revolutionize medical diagnosis but also to foster a deeper sense of trust and collaboration between man and machine. This research points us in the direction of a more patient-centric approach to AI development, one that prioritizes transparency, usability, and efficacy. If people can make sense of what happens inside the ‘black box’, how an AI works, or why and when it may fail, they will eventually be better able to accept AI-derived decisions.

Additionally, this research highlights the urgent need for legislation and regulations requiring medical AIs to have better explanatory power as a core feature. Medical AIs need to be able to provide both clinicians and patients with a clear rationale behind medical recommendations, and these XAIs need to be created with medical decision-makers in the development loop. Further, medical AIs need to offer explanations that are inclusive of diverse learning styles, languages, and cultural norms to ensure equitable healthcare delivery, support adherence to treatment, and equip patients to self-advocate through informed care.

In conclusion, Alam and Mueller's study serves as a guiding light in the ever-evolving landscape of healthcare AI. As we navigate the complexities of integrating technology into medicine, let us not forget the profound impact that a simple explanation can have on patient outcomes and patient experiences. After all, in the quest for better health, clarity is key and XAI is poised to illuminate the path ahead.

About the authors:

Dr. Lamia Alam is a postdoctoral fellow at Johns Hopkins Armstrong Institute for Patient Safety and Quality. Her research interests include AI in healthcare, human-AI teaming, human factors and system engineering solutions in high-risk healthcare environments.

Dr. Shannon Cole is the Senior Medical Writer and Editor at The Armstrong Institute for Patient Safety and Quality at Johns Hopkins Medicine. They facilitate organizational communication and dissemination efforts between research and clinical operations, including leading the "AI on AI" series.

The opinions expressed here are those of the authors and do not necessarily reflect those of The Johns Hopkins University.
