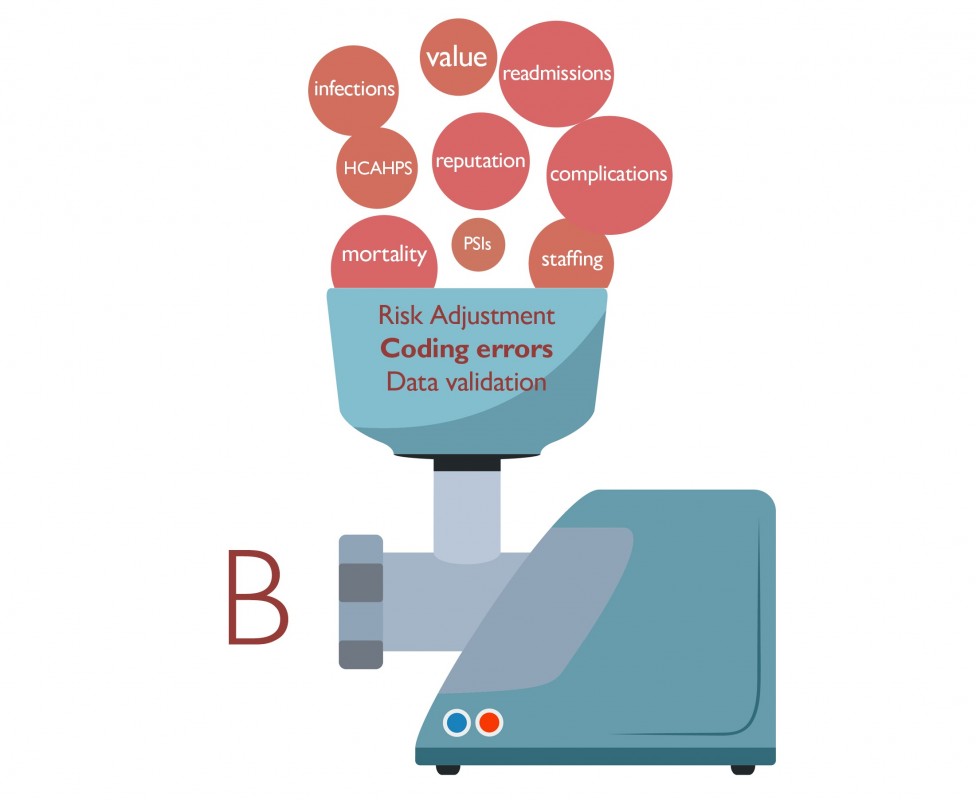

If you understand statistics and possess the intestinal fortitude to examine a ranking methodology, you will recognize that it involves ingredients that have to be recombined, repackaged and renamed. It's messy, like sausage-making.

This is not to say that the end product, hospital rankings, is distasteful. Patients deserve valid, transparent and timely information about quality of care so they can make informed decisions about whether and where to receive care. Ratings organizations like U.S. News & World Report work hard to create valid, unbiased hospital rankings out of imperfect data and measures. But the recipe needs to be right.

Patient safety indicators, a set of measures that reflect the incidence of various kinds of harm to hospital patients, are one ingredient I believe should be left out. These data are derived not from clinical records but from administrative codes in the bills sent to the federal Centers for Medicare and Medicaid Services, or CMS. Despite being broadly used in hospital ranking programs and pay-for-quality programs, patient safety indicators are notoriously inaccurate: They miss many harms while reporting false positives.

In a peer-reviewed paper published this spring in Medical Care, Johns Hopkins colleagues and I concluded that of 21 patient safety indicators, none can be considered scientifically valid.

Yet U.S. News wrote in a recent blog post that the inaccuracy of these measures might not pose such a vexing problem when it comes to comparing hospitals. If the frequency and degree of inaccuracies are similar across hospitals, according to this argument, then patient safety indicators can show how hospitals stack up against one another. If coding accuracy differs significantly between hospitals, however, that raises the question of whether these data should be used at all.
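To see why that assumption matters, consider a minimal sketch in Python. Every number in it is hypothetical and chosen only for illustration; none comes from the studies discussed here. It assumes two hospitals with different true harm rates and a `sensitivity` value for each, meaning the share of true harms that end up in billing codes. False positives are left out to keep the example small.

```python
# Hypothetical true harm rates per 1,000 discharges (illustrative only).
TRUE_RATES = {"Hospital A": 10.0, "Hospital B": 14.0}


def observed_rate(true_rate, sensitivity):
    """Harm rate visible in billing data when only a share of harms is coded."""
    return true_rate * sensitivity


def rank(sensitivities):
    """Order hospitals from lowest to highest observed harm rate."""
    observed = {
        name: observed_rate(TRUE_RATES[name], sensitivities[name])
        for name in TRUE_RATES
    }
    return sorted(observed, key=observed.get)


# Same coding sensitivity everywhere: the ranking matches the true order.
uniform = rank({"Hospital A": 0.8, "Hospital B": 0.8})

# Uneven coding sensitivity: the hospital with more true harm looks safer.
uneven = rank({"Hospital A": 0.9, "Hospital B": 0.5})

print("Uniform coding:", uniform)
print("Uneven coding: ", uneven)
```

In this toy case, the relative ranking survives inaccurate coding only when the inaccuracy is the same at both hospitals. Once one hospital captures far fewer of its harms, the order flips.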

Recent research, as well as my hospital's own experiences in improving our coding, would suggest that we should not assume that hospitals' coding practices are relatively uniform. Coding accuracy, coding practices and patient and hospital characteristics can skew many different kinds of data sent to CMS.

A 2014 Cleveland Clinic study, for instance, found that differences in coding of severe pneumonia cases could result in more than 28 percent of hospitals being assigned the wrong mortality rating by CMS. In another study, published this year, researchers reported that in small rural hospitals with no stroke unit or team, diagnosis codes matched the clinical record in only 60 percent of ischemic stroke cases, while in large metropolitan hospitals with a stroke unit or team, the codes matched nearly 97 percent of the time. These variations may affect comparisons between hospitals but also alter reimbursement for stroke patients, the authors write.

Finally, hospitals deemed the highest quality by measures such as accreditation and better process and outcome performance are penalized by CMS for hospital-acquired conditions more than five times as frequently as the hospitals scoring the worst. The researchers suggested that high-performing hospitals may simply look harder for adverse outcomes, and therefore find them more often. We experienced this firsthand at The Johns Hopkins Hospital: After implementing a best practice for routine ultrasound screening for blood clots, the number of clots that we found increased tenfold.

I have no reason to believe that patient safety indicator coding is somehow an exception to this unevenness. At Johns Hopkins, we have reduced by 75 percent the number of patient safety indicator incidents that we report, saving millions in unimposed penalties and improving our public profile. About 10 percent of the improvement resulted from changes in clinical care. The other 90 percent resulted from documentation and coding that was more thorough and accurate. Other hospitals may not have the resources to take on this complex effort, or they may be unaware that their coding accuracy is a problem.
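The arithmetic behind those percentages is worth spelling out. The sketch below, in Python, assumes a hypothetical baseline of 1,000 reported incidents (the actual counts are not given here) and reads "10 percent of the improvement" as 10 percent of the incidents removed from reporting.

```python
# Hypothetical baseline; the real number of reported incidents is not stated.
baseline_reported = 1000

total_reduction_pct = 75   # overall drop in reported incidents
clinical_share_pct = 10    # share of that drop attributed to clinical care
coding_share_pct = 90      # share attributed to documentation and coding

# Incidents no longer reported after the improvement effort.
removed = baseline_reported * total_reduction_pct // 100
still_reported = baseline_reported - removed

# Split the drop between its two sources.
removed_by_care = removed * clinical_share_pct // 100
removed_by_coding = removed * coding_share_pct // 100

print("Still reported:", still_reported)
print("Removed by better care:", removed_by_care)
print("Removed by better coding:", removed_by_coding)
```

Under these assumptions, only about 7.5 percent of the original reported incidents disappeared because patients were actually harmed less often. The other 67.5 percent disappeared because of how events were documented and coded.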

To their credit, U.S. News editors announced in late June that they were reducing the weight of the patient safety score, which is based on patient safety indicators, from 10 percent of a hospital's overall score to 5 percent. They also removed a particularly problematic patient safety indicator that tabulates the incidence of pressure ulcers.

We must not be satisfied with measures that only give us relative performance — how hospitals compare to one another. We need to have absolute measures of performance: How often are patients harmed? How often is a desired outcome achieved?

Certainly, it's good to know which hospitals or surgeons have better complication rates than others for hip replacements. But as a patient, don't you also want to know the absolute complication rate so you can decide whether to have surgery in the first place? If you're planning a prostatectomy, don't you want to know how frequently your surgeon's patients suffer from impotence afterwards? If you're a hospital leader, don't you want to know how your organization is progressing toward eliminating infections?

If health care used valid and reliable measures and audited the data hospitals provide — just as we audit financial data — hospitals would not have to get into the so-called coding game. And physicians might engage in quality improvement rather than be put off by the drive to look good. In the end, patients deserve quality measures that are more science and less sausage-making.

This post first appeared in U.S. News & World Report.

There should be a transparent examination of hospital ratings, and also of recognitions and awards: anything that professes to represent quality and safety to the consumer. I have worked in many hospitals on the front line and can dispute these ratings and designations. When one challenges the ratings with the rater, in many cases they ignore you completely. One constant is the refusal to assess their own fallibility and to reassess their methodology. Flawed methodology does lie, especially when an entity refuses efforts at correction. Patients deserve the truth.

There is very little incentive for hospitals to find and report adverse quality issues, Hopkins being a case in point. As long as penalties are imposed for adverse outcomes, there will be little incentive to find and report them. Perhaps we should think outside the box and REWARD rather than punish organizations that determine and report adverse outcomes (a bit of the airline aviation analogy applies here). Only in that way will we determine what our issues are and how they can be corrected. Unfortunately, I believe that the actual goal of CMS et al. is not quality improvement but rather cost reduction.